In the past, I wrote about robust regression. This is an important tool which handles outliers in the data. Roger Koenker is a substantial contributor in this area. His website is full of useful information and code so visit when you have time for it. The paper which drew my attention is “Quantile Autoregression” found under his research tab, it is a significant extension to the time series domain. Here you will find short demonstration for stuff you can do with quantile autoregression in R.

The data for this tutorial is the Euro-zone Misery index which can be found here . It is a monthly frequency time series with the sum: (unemployment rate + inflation rate) composing the so called “Misery index”.

Start with loading the data and checking what do different Information Criteria say about the number of lags in the model:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

options(digits = 4) library(quantreg) # For quantile regression library(qpcR) # for the Hannan Quinn Criterion y = ts(rev(read.table("https://www.dropbox.com/s/46opp9t18m0nmjz/mindex-08-12.txt?dl=0",sep = "\t",header = F)[,2]) , frequency = 12, start = c(1995,1) ) plot(y, main = "Misery Index over Time", ylab = "Index", lwd = 2, ty = "b",cex.main = 2) lagorder = function(x,maxorder){ # maxorder is the maximum lag number you allow ordmax = maxorder ; HQ = NULL ; AK = NULL ; SC = NULL ; lagmat = NULL l1 = length(x) for (i in 1:ordmax){ lagmat = cbind(lagmat[-i,],x[(1):(l1-i)]) # lagged matrix armod <- lm(x[(i+1):l1]~lagmat) HQ[i] = HQIC(armod) AK[i] = AIC(armod) SC[i] = BIC(armod) } return(c(which.min(HQ), which.min(AK),which.min(SC) )) } lagorder(y, maxorder = 12) # They all agree that one lag is enough: # 1 1 1 |

Now estimate quantile autoregression, one for for each quantile in 0.05 increments.

|

1 2 3 4 5 6 7 8 9 10 |

TT = length(y) lm0 = lm(y[-1]~y[-TT]) ; summary(lm0) tauseq = seq(.05,.95,.05) qslope = NULL ; qr0 = list() for (i in 1:length(tauseq)){ qr0[[i]] = rq(y[-1]~y[-TT], tau = tauseq[i]) qslope[i] = qr0[[i]]$coef[2] } |

A look at the result:

|

1 2 3 4 5 6 7 8 9 10 |

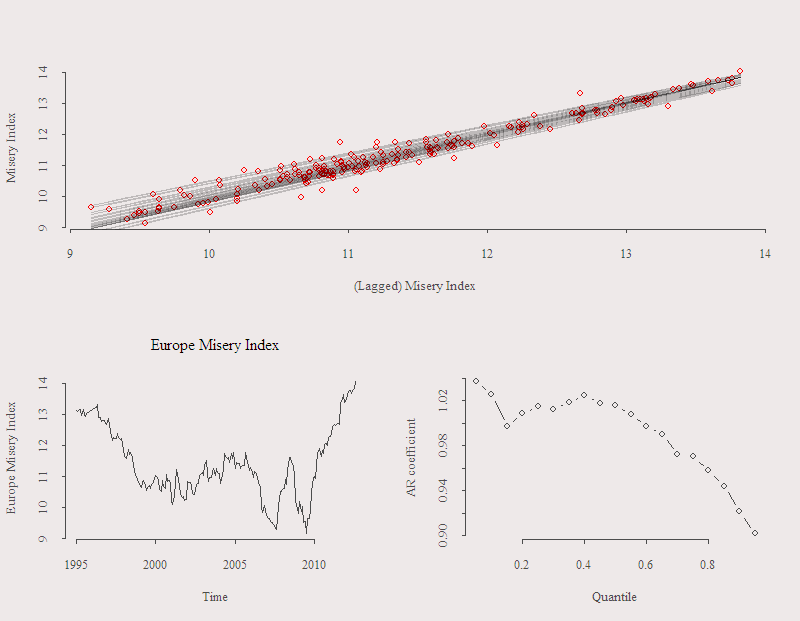

layout(matrix(c(1,1,2,3), 2, 2, byrow = TRUE)) layout.show(3) plot(y, ylab = "Europe Misery Index", main = "Europe Misery Index") plot(qslope~tauseq, ty = "b", xlab = "Quantile", ylab = "AR coefficient") plot(y[-1]~y[-TT], col = 2 , ylab = "Misery Index", xlab = "(Lagged) Misery Index") for (i in 1:length(tauseq)){ lines(qr0[[i]]$fit~y[-TT], lwd = .02, col = rgb(0, 0, 0, 0.2) ) } |

Top, the fitted lines are superimposed in gray. In the case of constant AR coefficients (constant across quantiles) we should get lines which are parallel to each other as the only change is the quantile you wish to fit. In this case, we can see in the bottom right panel that the AR coefficients are not constant. For fitting low quantiles, the process behaves like a random walk, while a strong mean reversion is observed for high quantiles. This asymmetry suggests the process is heteroskedastic, the variance in the lower end is higher than in the higher end so we get “fan” like figure instead of parallel lines. The economic meaning of it is that when this index is high, steps are takes to depress it. Steps like lowering the cost of borrowing which in turns allow struggling companies to stay afloat and successful companies to maintain investment levels. Point is that when the index is high, we try to depress it, while when it is in its middle range it can go both ways, hence the “fan” shape.

Notes

(1) There is less dispersion when the lag index is higher, and more dispersion when the lag index is lower. This is a conditional quantile autoregression, it does not answer the question “what will happen to the dispersion of the index when I change the lag by small amount?”, to answer this kind of questions you will need to read about unconditional quantile regression, which is not discussed here. (unconditional on the level of the index..)

(2) The fitted lines should not cross each other as they do in the graph above. When this happens interpretation is very problematic, there are ways to prevent these crossing to improve interpretation.

In another context, you can try this tool for estimating Value-at-Risk, with quantile equal 0.05 for the 5% VaR value. Keep in mind that you need a large sample for accuracy in this case, as only 5% of the observations will have information relevant to determine the fitted value. Maybe interesting so see how the estimate of VaR from quantile regression compares with the common garch(1,1) etc.

What I especially like about quantile regression tool is that the only assumption is very “light”, only that the functional form is linear, no Gaussianity or such, so it is very general. Plus is at the ready for R users thanks to Roger Koenker.

I cannot run the last part of the lasr code:

for (i in 1:length(tauseq)){

lines(qr0[[i]]$fit~y[-TT], lwd = .02, col = rgb(0, 0, 0, 0.2) )

}

The error message returns:

error in (function (formula, data = NULL, subset = NULL, na.action = na.fail, :

variable lengths differ (found for ‘y[-TT]’)

>

Check the length(qr0[[i]]$fit), it should be as the same length as length(y[-TT]), if it is not the case you zoom in on the actual numbers and see what is the problem.

Hello Eran –

I just read this posting and I’m starting to become interested in quantile regression so maybe you can answer this question for me. Would the AR coefficients be biased if there were autocorrelation in the residuals? If so, how would you account for it?

Intuitively, I think the forecast are will not be unbiased, but the CI will probably be too wide. I think if you spot auto-correlation in the residual maybe better to use different transformation (Diff on Diff for example).

Thanks

Thanks for reading.

Hi Eran,

I am trying to run the example, seems that the data file is not available now. could you please share the dataset?

Thank you

is there any difference in the quantile regression process if the time series data is non stationary

Good question. I think the same reasons to have stationary data when it comes to the squared loss function (“mean” regression) apply for least absolute deviation loss function (“median” regression).

hi,

can you share the codes to run quantile autoregression of order 2