Some knowledge about the bootstrapping procedure is assumed.

In time series analysis, Information Criteria can be found under every green tree. These are function to help you determine when to stop adding explanatory variables to your model.

The most famous is the AIC (Akaike information criterion):

The second most famous is the SIC (Schwarz information criterion, aka Bayesian information criterion BIC):

The least famous out of these three is the HQ (Hannan-Quinn information criterion):

K is the number of parameters, N is the number of observations, and L is the log-likelihood for the model. We stop adding variables when the criterion is in it’s minimum. They all have solid underlying theory but since we can, we avoid diving into the math.

The idea is very simple, when you add a regressor, the model is

more complex (more parameters, k –> higher) but

will fit better (higher likelihood, L –> higher).

What all these guys are doing is comparing the added complexity with the added goodness of fit. For example, for the AIC, you add an extra variable, , and

. If the increase in likelihood is higher than the “penalty” (+2, which is constant for this criterion), AIC goes lower, and so we keep on adding. If not, it means that the improvement in the fit is not enough to compensate for the added complexity, so we stop adding. Ain’t life grand?

In the previous post I provided the code for bootstrapping time series. We can use it to check how comfortable we feel with each of these above functions, for selecting the number of lags in an autoregression. Same data as before, the Misery index. Inflation and unemployment numbers concerning the Eurozone (17 countries) can be found here. I summed them up to get the index and made it public via the dropbox public folder.

Here is a function to return the number of lags chosen by each criterion:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

library(qpcR) # for the Hanna Quinn Criterion library(tseries) y = ts(read.table("https://dl.dropbox.com/u/9409065/mindex1.txt", header = F, sep = "\t")[,2] ,frequency = 12, start = c(1995,6) ) # The function: lagorder = function(x,maxorder){ # maxorder is the maximum lag number you allow ordmax = maxorder ; HQ = NULL ; AK = NULL ; SC = NULL ; lagmat = NULL l1 = length(x) for (i in 1:ordmax){ lagmat = cbind(lagmat[-i,],x[(1):(l1-i)]) # lagged matrix armod <- lm(x[(i+1):l1]~lagmat) HQ[i] = HQIC(armod) AK[i] = AIC(armod) SC[i] = BIC(armod) } return(c(which.min(HQ), which.min(AK),which.min(SC) )) } lagorder(y, maxorder = 14) # They all agree: [1] 1 1 1 # one lag is enough here.. |

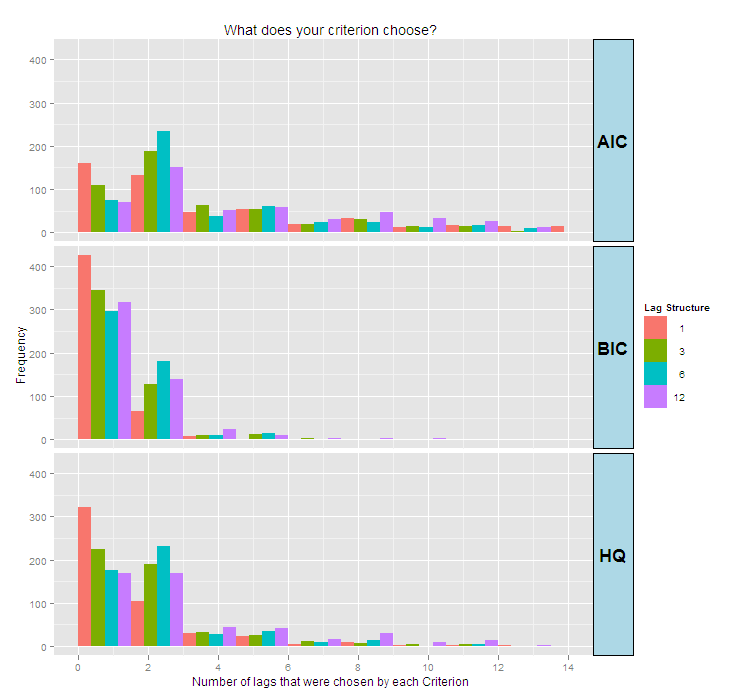

Now for the interesting part (interesting?? really??), let’s use the code from the previous post , bootstrap the series, and have a look at the distribution of each criterion. I use the Bootstrap based on IID innovations code. In order to get the residuals we need to determine lag structure, let’s see what happens for different lag structures, {1,3,6,12}. That is, I estimate the series using these (4 models), get the residuals and bootstrap new series. Once done, criteria take their pick at the number of lags they think is most suitable. This takes few seconds.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 |

ord = c(1,3,6,12) numsim = 500 numobs = 100 ts.sim = array(dim = c(numobs, numsim, length(ord))) # 100 simulation, each 100 obs IC = array(dim=c(numsim, length(ord), 3)) # this guy holds number of lags chosen according to the 3 IC's for (i in 1:length(ord)){ for (j in 1:numsim){ ts.sim[,j,i] <- mean(y) + arima.sim(list(order = c(ord[i],0,0), ar =ar.ols(y, demean = T,intercept=F, order.max = ord[i],aic = F)$ar ), n = numobs, sd = sd(y)/2) IC[j,i,] = lagorder(ts.sim[,j,i], maxorder = 14) ### Plot the results: dat = data.frame(LagOrder = ord[c(sort(rep(1:4,numsim)))], SimNumber = rep(c(1:100),4), AIC = c(IC[,,2]), BIC =c(IC[,,1]), HQ =c(IC[,,3])) dat = data.frame(LagOrder = rep(ord[c(sort(rep(1:4,numsim)))],3) ,CritFound = c(IC[,,1:3]), Crit = c(rep("HQ",4*numsim),rep("AIC",4*numsim),rep("BIC",4*numsim))) pl = ggplot(dat, aes((CritFound), fill= as.factor(LagOrder))) + geom_bar(position="dodge", width = 0.5,binwidth = 1.5)+ facet_grid(Crit ~ .)+opts(title = "What does your criterion choose?") + labs(x = 'Number of lags that were chosen by each Criterion', y = "Frequency", fill = 'Lag Structure')+ scale_x_continuous(limits = c(0, 14))+ opts(strip.text.y = theme_text(size=18, face="bold"), strip.background = theme_rect(fill="lightblue")) print(pl) }} |

The colors represent the “true” (simulated) lag structure, and the X axis stands for the number of lags chosen by the criteria. For each lag structure we have 500 simulated series, so this is essentially a fancy histogram.

So, what do we want and what do we have?

We want all criteria to choose the right number of lags, we want one high orange bar at x = 1, one high green bar at x = 3 and so forth, an “oracle” picture for example would have a high orange bar at lag one on tha X axis and no orange bars at higher lags. We can see for example that the AIC have many orange bars at high number of lags, this means that although we drew from a lag structure of one (orange), the criterion chooses a higher number in many of the bootstrapped samples. When we draw from lag structure of 12, we optimally would have very high purple bar at lag 12 and very few purple bars at lower lags. However, we see that although we drew from a lag structure of 12 (purple), all criteria chose much smaller number. What else?

We see that for higher (“true” or simulated) lag structure, the AIC performs best, the price is paid when the “true” number of lags is small and the AIC continue to add irrelevant lags in comparison with the other two criteria. The AIC penalty term (2K) is independent of sample size, moving from “adding lag number 10” to “adding lag number 11” still bears a penalty of 2, which is not that high when you compare it with the BIC for example. The BIC considers also how many data points you use, and since in this case log(“length of series”) is higher than 2, the penalty is higher, so an extra lag is heavier penalized relative to the AIC.

For lower number of lags, BIC is best, choosing the right number most of the time, again with the price of a high probability for a mistake when the (“true”) number of relevant lags is high. The HQ is somewhere in between.

In sum

An observation from this informal experiment is to consider AIC when you suspect longer memory and have relatively few data points, BIC when you suspect shorter memory and have relatively high number of observations, and HQ when you have no idea and no guess, which should not happen often in practice, of course best to check all three using the function above, and maybe they all agree which will help.

Thank you very much for your blog which is very helpful in almost every case.

Under the link you will find a full search within some (ARMA) DGP class by AIC, BIC, SIC which can be plotted as heatmap as well.

This procedure helped me to get a feeling for the choice of information criterion as well as its behaviour.

Hi Stefan, thank for this.

Hello Eran,

The link for the misery data does not work anymore. Would you be able to upload it again? or maybe explain further how to calculate such measurement. I am currently writing my bachelor thesis, and wish to execute Sieve bootstrap method 🙂

Best regards

Thanks for letting me know. Now fixed (under the “public” link).