Note: I usually write more technical posts; this is an opinion piece. And you know what they say: opinions are like feet, everybody’s got a couple.

Machine learning is simply statistics

There are a lot of buzzwords nowadays: data science, business intelligence, machine learning, deep learning, statistical learning, predictive analytics, knowledge discovery, data mining, pattern recognition. Surely you can think of a few more. So many that you could fill a chapter explaining and discussing the differences (e.g. Data Science for Dummies).

But really, we are all after the same thing: extracting knowledge from data. Perhaps it is because we want to explain something, perhaps it is because we want to predict something; the reason is secondary. All those terms fall under one umbrella, which is modern statistics, period. I freely admit that the jargon is different. What is now dubbed feature space in the machine learning literature is simply the independent, or explanatory, variables in the statistical literature. What one calls a softmax classifier in the deep learning context, another calls multinomial regression in a basic Statistics 101 course. Feature engineering? Call it variable transformation instead.
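To make the softmax example concrete, here is a minimal sketch in Python with NumPy. The function names, the simulated data, and the arbitrary coefficient matrix `W` are all my own illustrative assumptions. It only checks that the cross-entropy loss of a softmax classifier and the negative log-likelihood of a multinomial logit model are the same number under two names.

```python
import numpy as np

def softmax(scores):
    # Subtract the row-wise max for numerical stability; the result is unchanged.
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def cross_entropy(W, X, y):
    # "Machine learning" name: average cross-entropy loss of a softmax classifier.
    probs = softmax(X @ W)
    return -np.mean(np.log(probs[np.arange(len(y)), y]))

def multinomial_neg_log_likelihood(W, X, y):
    # "Statistics" name: average negative log-likelihood of a multinomial
    # logit model, written out observation by observation.
    total = 0.0
    for x_i, y_i in zip(X, y):
        eta = x_i @ W                           # linear predictor, one entry per class
        log_norm = np.log(np.sum(np.exp(eta)))  # log of the normalizing constant
        total += eta[y_i] - log_norm
    return -total / len(y)

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))     # 50 observations, 3 explanatory variables ("features")
y = rng.integers(0, 4, size=50)  # 4 classes
W = rng.normal(size=(3, 4))      # arbitrary coefficients ("weights"), not fitted

same = np.isclose(cross_entropy(W, X, y), multinomial_neg_log_likelihood(W, X, y))
print(same)
```

Nothing is fitted here; the point is only that both communities would minimize the same objective.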

So the jargon is different for whatever historical reasons. However, most if not all of those fancy, data-hungry algorithms that we call machine learning are simply statistics with a strong computational engine which propels them. And that is well and fine. Fantastically useful even, but still statistics.

So why the debate?

What’s the reason for the rise of those numerous and growing additional terms? There are reasons. Improved computational power and the nose-dive in digital storage prices gave rise to special abilities: the ability to fit data without a story, without a model, without an underlying reasoning, without a prior hypothesis. In the past, prior to the accumulation of those powerful CPUs and GPUs, statisticians put a lot of effort into developing a theory which would justify the numerical procedure to follow. Statistical ideas could not always be extensively validated empirically due to the lack of computing power. Those ideas therefore had to be theoretically sound for us to trust them. That kind of trust used to be super important. But trust can also be developed in a different manner. Nowadays, for example, one can simply apply a numerical recipe and voilà: the machine learns by itself. Trust is developed by applying the procedure to different data and checking that performance is satisfactory.
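As a rough sketch of what "applying the procedure to different data and checking performance" can look like in practice, here is a k-fold cross-validation loop in Python with NumPy. The function name `k_fold_mse`, the simulated data, and the choice of a least-squares fit as the procedure being checked are illustrative assumptions, not a prescribed recipe.

```python
import numpy as np

def k_fold_mse(X, y, k=5, seed=0):
    # Shuffle the observations and split them into k roughly equal folds.
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    errors = []
    for i in range(k):
        test = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        # Fit on the training folds only (ordinary least squares here).
        coef, *_ = np.linalg.lstsq(X[train], y[train], rcond=None)
        # Score on data the fit has never seen.
        errors.append(np.mean((y[test] - X[test] @ coef) ** 2))
    return np.array(errors)

# Simulated data: y = 2 + 3x + noise with standard deviation 0.5.
rng = np.random.default_rng(42)
x = rng.uniform(-1, 1, size=200)
X = np.column_stack([np.ones_like(x), x])  # intercept column plus x
y = 2 + 3 * x + rng.normal(scale=0.5, size=200)

fold_errors = k_fold_mse(X, y)
print(fold_errors.mean())
```

If the held-out errors are consistently small, we start trusting the procedure, with no theorem involved.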

This is really the biggest and perhaps only difference between old and modern statistics. In machine learning you don’t need to specify a model. You don’t need any story, or theoretical justification behind what you are doing. For example, you don’t need to ask the computer to find the best regression line, and you don’t need to tell the computer if the line is a straight line or a very wiggly line. You only need to ask the computer to find the best fit. This is a very generic request you forward to the machine. You only need to specify how you would like it done, that is, which numerical algorithm should be used. This does not mean that you are not doing statistics.
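Here is a small sketch of that contrast in Python with NumPy, using k-nearest-neighbours averaging as the "just find the best fit" procedure. That choice, the function name `knn_predict`, and the simulated sine-shaped data are my own assumptions for illustration. Nowhere do we tell the computer whether the line is straight or wiggly.

```python
import numpy as np

def knn_predict(x_train, y_train, x_new, k=10):
    # Distance from every new point to every training point (1-D inputs).
    dist = np.abs(x_new[:, None] - x_train[None, :])
    # Indices of the k closest training points for each new point.
    nearest = np.argsort(dist, axis=1)[:, :k]
    # Prediction: the average response of those neighbours. No model shape is assumed.
    return y_train[nearest].mean(axis=1)

rng = np.random.default_rng(1)
x = rng.uniform(0, 2 * np.pi, size=300)
y = np.sin(x) + rng.normal(scale=0.1, size=300)  # the truth is wiggly, but we never say so
grid = np.linspace(0.5, 2 * np.pi - 0.5, 50)

# Model-based fit: we commit to a straight line up front.
slope, intercept = np.polyfit(x, y, deg=1)
line_mse = np.mean((slope * grid + intercept - np.sin(grid)) ** 2)

# Model-free fit: we only choose the algorithm and its knob k.
knn_mse = np.mean((knn_predict(x, y, grid) - np.sin(grid)) ** 2)

print(line_mse, knn_mse)
```

The only thing specified for the second fit is how it should be done (the algorithm and `k`), not what shape the answer should take.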

There is, however, some discussion on the difference between fitting the data with a model and fitting the data model-free, or story-free. A fascinating reference is Statistical Modeling: The Two Cultures. In the discussion part of the paper, Prof. Bradley Efron writes: “At first glance Leo Breiman’s stimulating paper looks like an argument against parsimony and scientific insight, and in favor of black boxes with lots of knobs to twiddle. At second glance it still looks that way, ...”

What we still see, however, is that the need to understand why a particular numerical algorithm performs well is still there. We usually don’t ship results coming from an uninterpretable model as is, even if they are good at explaining or predicting. Regulation does not allow it. But more importantly, our intellectual curiosity does not allow it. We are not (yet) comfortable believing that an algorithm can, after a few hours, diagnose cancerous tumors better than an experienced MD. This is similar to the discomfort I am sure to feel cruising at 100 km per hour in my first driverless car ride. But it looks to me like we are slowly relaxing this discomfort.

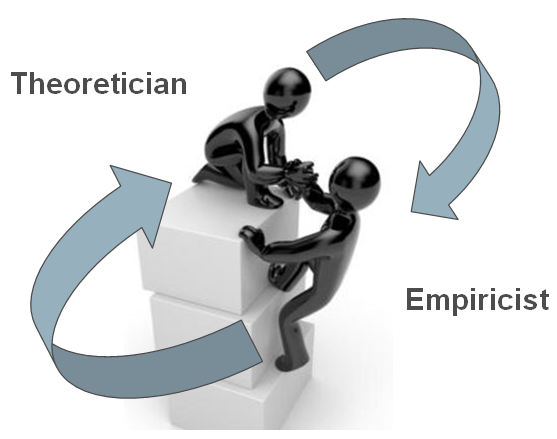

Still, a lot of research effort is directed towards understanding why some numerical algorithms succeed or fail on particular data. A couple of decades ago, walking in the corridors of an exact science faculty, you could have noticed that scientists who develop the theory have a certain patronizing air to them. The empiricist was the butler, the theorist was the landlord. Now things are somewhat reversed. Theoreticians are now lagging behind. A numerical algorithm is shown, empirically but forcefully, to work well, while the theory behind it is not yet developed. In fact, theory is secondary at best as far as most practitioners are concerned. It is however still statistics, just adapted to the new tools of the modern age.

Cool excellent. Strong opinion.

Techniques like deep learning and reinforcement learning are what make machine learning different from statistics.