In the last few decades there has been tremendous progress in volatility estimation. A major step is the use of the intraday price path in addition to daily prices. Estimators that use intraday information have been shown to be more accurate: they converge faster to the true, unobserved volatility.

This makes sense. The usual proxy you have heard about, the close-to-close return, uses only two data points per day. The measures below use much more information. They are termed range-based because they look at the high-low range over short intervals (one minute in my example) and sum these up to get the daily estimate. So they use more information, since they consider the full price path within each day.
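The claim that range-based estimators converge faster can be checked on simulated data. Below is a minimal sketch, assuming log prices follow a driftless Brownian motion with a known daily variance and no microstructure noise (these are my assumptions, not the post's). It compares the squared close-to-close return with a Parkinson estimate built from one-minute highs and lows and summed over the day.

```r
# Sketch: close-to-close proxy versus the Parkinson range estimator on
# simulated days whose true variance is known.
# Assumptions: driftless Brownian motion in log prices, each minute traced
# with `steps` sub-ticks, no bid-ask bounce or other microstructure noise.
set.seed(1)
sigma2 <- 1e-4   # true daily variance (1% daily volatility)
n      <- 390    # one-minute intervals per day
steps  <- 50     # sub-ticks per minute used to trace the path
n.days <- 200

cc <- numeric(n.days)  # close-to-close proxy: squared daily log return
pk <- numeric(n.days)  # Parkinson estimate of the daily variance
for (d in seq_len(n.days)) {
  path  <- c(0, cumsum(rnorm(n * steps, sd = sqrt(sigma2 / (n * steps)))))
  m     <- matrix(path[-1], nrow = steps)            # column i = sub-ticks of minute i
  start <- path[seq(1, by = steps, length.out = n)]  # log price at the start of minute i
  hi <- pmax(apply(m, 2, max), start)
  lo <- pmin(apply(m, 2, min), start)
  cc[d] <- path[length(path)]^2
  # summed over the day (no division by n), so this is a daily variance
  pk[d] <- sum((hi - lo)^2) / (4 * log(2))
}

# Compare the average level and the day-to-day noise of the two estimators
c(mean.cc = mean(cc), mean.pk = mean(pk))
c(sd.cc = sd(cc), sd.pk = sd(pk))
```

Expect the range estimator to be far less noisy than the close-to-close proxy. Because the path is sampled discretely, the simulated range understates the true range, so `pk` comes out somewhat below `sigma2`; raising `steps` shrinks that bias.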

I present three estimators. They have solid theory behind them, but, as with any step forward, they bring new tough decisions. I refer specifically to the number of intervals (here one-minute intervals, so 390). In my experience this choice matters quite a lot; it has to do with market microstructure and bid-ask spreads, and you can find a related paper in the references. Let's move on to the R code.
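One practical way to study the choice of interval length is to rebuild coarser bars from the one-minute data and rerun the estimators. A minimal sketch follows; `aggregate_bars` and `aggregate_array` are hypothetical helpers, and they assume columns ordered open, high, low, close and a number of bars per day that is a multiple of `k`.

```r
# Hypothetical helper: merge k consecutive one-minute bars into one k-minute
# bar. `day` is an n x 4 matrix (open, high, low, close); n %% k must be 0.
aggregate_bars <- function(day, k) {
  n <- nrow(day)
  stopifnot(n %% k == 0)
  g <- rep(seq_len(n / k), each = k)                  # bar id for each minute
  cbind(open  = day[seq(1, n, by = k), 1],            # first open in the group
        high  = as.vector(tapply(day[, 2], g, max)),  # highest high
        low   = as.vector(tapply(day[, 3], g, min)),  # lowest low
        close = day[seq(k, n, by = k), 4])            # last close in the group
}

# Apply it to every day of an n x (>= 4) x days array, returning an
# (n / k) x 4 x days array in the same layout.
aggregate_array <- function(x, k) {
  days <- lapply(seq_len(dim(x)[3]), function(d) aggregate_bars(x[, 1:4, d], k))
  array(unlist(days), dim = c(dim(x)[1] / k, 4, dim(x)[3]))
}

# Six made-up one-minute bars collapsed into two three-minute bars
day <- cbind(open  = c(100, 101, 102, 101, 100, 99),
             high  = c(101.5, 102, 103, 101.5, 100.5, 100),
             low   = c(99.5, 100.5, 101, 100, 99, 98.5),
             close = c(101, 102, 101, 100, 99, 99.5))
aggregate_bars(day, 3)
```

With 390 one-minute bars, `k` can be 5, 10, 15 or 30, for example, which lets you see how sensitive each estimator is to the interval length on your own data.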

Here are three options. In all formulas, $H_i$, $L_i$, $C_i$ and $O_i$ are the high, low, close and open prices for interval $i$, and $n$ is the number of intervals in a day.
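The R functions in this post expect prices in a three-dimensional array: intervals by price columns (open, high, low, close, in that order) by days. A minimal sketch of building that layout from a list of per-day OHLC matrices; `make_price_array` is a hypothetical helper and assumes every day has the same number of bars.

```r
# Hypothetical helper: stack a list of per-day OHLC matrices (n x 4, columns
# open, high, low, close, same n every day) into an n x 4 x days array.
make_price_array <- function(day.list) {
  n <- nrow(day.list[[1]])
  stopifnot(all(vapply(day.list, nrow, integer(1)) == n))
  array(unlist(lapply(day.list, function(m) as.matrix(m)[, 1:4])),
        dim = c(n, 4, length(day.list)),
        dimnames = list(NULL, c("open", "high", "low", "close"), names(day.list)))
}

# Two made-up days with two intervals each
day1 <- cbind(c(100, 101), c(101, 102), c(99, 100), c(101, 101))
day2 <- cbind(c(101, 100), c(101.5, 101), c(99.5, 99), c(100, 100.5))
x <- make_price_array(list(d1 = day1, d2 = day2))
dim(x)             # intervals x price columns x days
x[, "high", "d2"]  # the highs of the second day
```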

Parkinson (1980):

$$\hat\sigma^2_{P} = \frac{1}{4 n \ln 2}\sum_{i=1}^{n}\left(\ln\frac{H_i}{L_i}\right)^2 \tag{1}$$

```r
# Parkinson volatility estimator
# x is an array of prices with dimensions
#   (num.intervals.each.day) x (5 or 6 columns: "open", "high", "low", "close", ...) x (number.days)
Park <- function(x) {
  n <- dim(x)[1]  # number of intervals each day
  l <- dim(x)[3]  # number of days (the most recent year in this post)
  pa <- numeric(l)
  for (i in seq_len(l)) {
    log.hi.lo <- log(x[1:n, 2, i] / x[1:n, 3, i])  # log(high / low) per interval
    pa[i] <- sum(log.hi.lo^2) / (4 * n * log(2))
  }
  return(pa)
}
```
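When there are many days, the loop can be replaced by a single vectorized expression. The sketch below computes the same quantity as `Park()` for all days at once; `ParkVec` is a name I made up, and it assumes the same array layout. A tiny two-interval day lets you check the result by hand against equation (1).

```r
# Loop-free Parkinson estimator: same formula as Park(), applied to all days
# at once. x is the n x (>= 4) x days price array (open, high, low, close).
ParkVec <- function(x) {
  n <- dim(x)[1]
  # matrix(..., nrow = n) keeps an n x days layout even when there is one day
  log.hi.lo <- matrix(log(x[, 2, ] / x[, 3, ]), nrow = n)
  colSums(log.hi.lo^2) / (4 * n * log(2))
}

# Tiny worked example: one day with two intervals
x <- array(c(100, 101,   # open
             101, 102,   # high
              99, 100,   # low
             101, 101),  # close
           dim = c(2, 4, 1))
ParkVec(x)
(log(101 / 99)^2 + log(102 / 100)^2) / (8 * log(2))  # the same value, by hand
```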

Garman Klass (1980):

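$$\hat\sigma^2_{GK} = \frac{1}{n}\left[\sum_{i=1}^{n}\frac{1}{2}\left(\ln\frac{H_i}{L_i}\right)^2 - (2\ln 2 - 1)\sum_{i=2}^{n}\left(\ln\frac{C_i}{C_{i-1}}\right)^2\right] \tag{2}$$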

```r
# Garman-Klass volatility estimator
# x is an array of prices with dimensions
#   (num.intervals.each.day) x (5 or 6 columns: "open", "high", "low", "close", ...) x (number.days)
GarmanKlass <- function(x) {
  n <- dim(x)[1]  # number of intervals each day
  l <- dim(x)[3]  # number of days
  gk <- numeric(l)
  for (i in seq_len(l)) {
    log.hi.lo <- log(x[1:n, 2, i] / x[1:n, 3, i])
    # close-to-close log returns between consecutive intervals (n - 1 terms)
    log.cl.to.cl <- log(x[2:n, 4, i] / x[1:(n - 1), 4, i])
    gk[i] <- (sum(0.5 * log.hi.lo^2) -
              sum((2 * log(2) - 1) * log.cl.to.cl^2)) / n
  }
  return(gk)
}
```
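The textbook Garman-Klass formula uses each interval's own open-to-close return, $\ln(C_i/O_i)$, where the function above uses the close-to-close return between consecutive intervals. With one-minute bars the open of an interval is usually very close to the previous close, so the two versions should typically differ little, but it may be worth comparing them on your data. A sketch of the open-to-close variant; `GarmanKlassOC` is a hypothetical name and assumes the same array layout.

```r
# Textbook Garman-Klass form: 0.5 * ln(H/L)^2 - (2 ln 2 - 1) * ln(C/O)^2,
# averaged over the n intervals of each day.
# x is the n x (>= 4) x days price array (open, high, low, close).
GarmanKlassOC <- function(x) {
  n <- dim(x)[1]
  log.hi.lo <- matrix(log(x[, 2, ] / x[, 3, ]), nrow = n)  # n x days
  log.cl.op <- matrix(log(x[, 4, ] / x[, 1, ]), nrow = n)  # n x days
  colSums(0.5 * log.hi.lo^2 - (2 * log(2) - 1) * log.cl.op^2) / n
}

# One made-up day with two intervals (open, high, low, close columns)
x <- array(c(100, 101, 101, 102, 99, 100, 101, 101), dim = c(2, 4, 1))
GarmanKlassOC(x)
```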

Rogers and Satchell (1991):

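$$\hat\sigma^2_{RS} = \frac{1}{n}\sum_{i=1}^{n}\left[\ln\frac{H_i}{C_i}\,\ln\frac{H_i}{O_i} + \ln\frac{L_i}{C_i}\,\ln\frac{L_i}{O_i}\right] \tag{3}$$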

```r
# Rogers and Satchell volatility estimator
# x is an array of prices with dimensions
#   (num.intervals.each.day) x (5 or 6 columns: "open", "high", "low", "close", ...) x (number.days)
RogerSatchell <- function(x) {
  n <- dim(x)[1]  # number of intervals each day
  l <- dim(x)[3]  # number of days
  rs <- numeric(l)
  for (i in seq_len(l)) {
    log.hi.cl <- log(x[1:n, 2, i] / x[1:n, 4, i])  # log(high / close)
    log.hi.op <- log(x[1:n, 2, i] / x[1:n, 1, i])  # log(high / open)
    log.lo.cl <- log(x[1:n, 3, i] / x[1:n, 4, i])  # log(low / close)
    log.lo.op <- log(x[1:n, 3, i] / x[1:n, 1, i])  # log(low / open)
    rs[i] <- sum(log.hi.cl * log.hi.op + log.lo.cl * log.lo.op) / n
  }
  return(rs)
}
```
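A property often cited for the Rogers and Satchell estimator is that it is built to stay unbiased when prices drift, whereas the Parkinson and Garman-Klass derivations assume zero drift. An extreme, made-up case shows the difference: bars that trend steadily up, each opening at its low and closing at its high. The sketch below (with a hypothetical vectorized `RogersSatchellVec`, same formula as `RogerSatchell()`) gives exactly zero for such a day, while the Parkinson formula attributes the whole range to volatility.

```r
# Loop-free Rogers-Satchell estimator (same formula as RogerSatchell()).
# x is the n x (>= 4) x days price array (open, high, low, close).
RogersSatchellVec <- function(x) {
  n  <- dim(x)[1]
  op <- matrix(x[, 1, ], nrow = n); hi <- matrix(x[, 2, ], nrow = n)
  lo <- matrix(x[, 3, ], nrow = n); cl <- matrix(x[, 4, ], nrow = n)
  colSums(log(hi / cl) * log(hi / op) + log(lo / cl) * log(lo / op)) / n
}

# A made-up day of pure trend: every bar opens at its low and closes at its high
trend <- array(c(100, 101, 102,   # open  (= low)
                 101, 102, 103,   # high  (= close)
                 100, 101, 102,   # low
                 101, 102, 103),  # close
               dim = c(3, 4, 1))
n  <- dim(trend)[1]
rs <- RogersSatchellVec(trend)                                       # exactly 0
pk <- sum(log(trend[, 2, 1] / trend[, 3, 1])^2) / (4 * n * log(2))  # Parkinson, > 0
c(rogers.satchell = rs, parkinson = pk)
```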

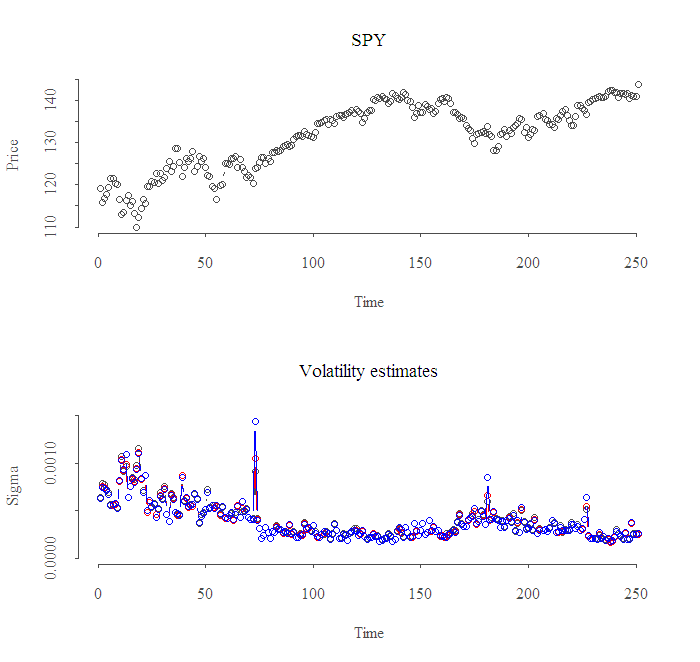

The result for the most recent year is:

As you can see, the correlation between the estimates is very high. In one sense this is comforting, since it suggests the code is correct, but it also means it does not really matter which one you choose.

```r
# v1, v2, v3: the daily estimates returned by the three functions above
cor(cbind(sqrt(v1), sqrt(v2), sqrt(v3)), use = "complete.obs")
#       [,1]  [,2]  [,3]
# [1,] 1.000 0.989 0.997
# [2,] 0.989 1.000 0.977
# [3,] 0.997 0.977 1.000
```
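Because each function divides the summed terms by n, `v1`, `v2` and `v3` are average per-interval variances rather than daily ones. Multiplying by n gives the daily variance, and multiplying further by the number of trading days gives an annualized figure. The conversion below is a sketch: n = 390 and 252 trading days per year are my assumptions, and `to_daily_vol` and `to_annual_vol` are hypothetical names. Scaling by a positive constant does not change a correlation, so the table above is unaffected.

```r
# Assumptions: v comes from Park(), GarmanKlass() or RogerSatchell(),
# n = 390 one-minute intervals per day, 252 trading days per year.
to_daily_vol  <- function(v, n = 390) sqrt(n * v)
to_annual_vol <- function(v, n = 390, days = 252) sqrt(days * n * v)

# Made-up per-interval variances for three days, for illustration only
v <- c(2.5e-7, 4.0e-7, 1.6e-7)
to_daily_vol(v)
to_annual_vol(v)
```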

Thanks for reading.

Notes and references:

1. Credit to Jeffrey A. Ryan for the hard work of interfacing R with IB (the IBrokers package).

2. The paper mentioned in the post: Range-based Covariance Estimation. Other sources are:

—

3. I get the data from IB; I am not familiar with another source for intraday quotes. It would be great if someone could suggest one in the comments.
