Introduction

When hopping from univariate volatility forecasts to multivariate volatility forecast, we need to understand that now we have to forecast not only the univariate volatility element, which we already know how to do, but also the covariance elements, which we do not know how to do, yet. Say you have two series, then this covariance element is the off-diagonal of the 2 by 2 variance-covariance matrix. The precise term we should use is “variance-covariance matrix”, since the matrix consists of the variance elements on the diagonal and the covariance elements on the off-diagonal. But since it is very tiring to read\write “variance-covariance matrix”, it is commonly referred to as the covariance matrix, or sometimes less formally as var-covar matrix.

If you are still reading this, I can imagine you have some experience in modelling correlation. The link between correlation and covariance is direct, given that you know the variance of the individual series:

So when I first looked at this subject I did not understand why do we need the colossal literature describing all kinds of multivariate models. Why not model all the off-diagonal individually, using for example a rolling window of the sample pairwise correlation? quickly replied, you want to have a valid correlation matrix, which means symmetric (easy enough to impose) and positive-semidefinite, also known as nonnegative-definite (not easy to impose).

First, why the nonnegative-definite property is important, and second, why it is not easy to impose. Think about nonnegative-definite imposition as the multivariate equivalent to the positivity imposition on volatility in the univariate case. You don’t want to have your machine spit out negative volatility do you? In the univariate case multiplying by any squared number, we trivially remain in positive territory. In a higher dimension, ensuring “positivity” of the covariance involves multiplying, not by a squared scalar, but by a “squared” vector.

Denote XC as the centered random variable X, so . Now by definition

is a covariance matrix, clearly nonnegative-definite. Now if we multiply the matrix by a “squared” vector,

we can “insert” the vector into the expectation (because (1) the vector is not a random variable, and (2) linearity of the expectation operator). We (should) still get nonnegative-definite matrix:

It does not matter which vector

you use, since it is “squared”. This is the origin to the algebraic reverse: A symmetric matrix

of size

is positive semi-definite if and only if

for all possible vectors

(of size

of course).

If we model the covariance entries individually, pairwisely, and “patch” them together into a matrix, placing each pairwise covariance in the right place (for example the covariance between variable 1 and variable 3 in entries and

, there is no guarantee that we eventually end up with a nonnegative-definite matrix. Since there is no covariance matrix which is nonnegative-definite, then what we can potentially get an invalid covariance matrix. Practitioners, free from a tedious academic refereeing process, may get away with this theoretical blunder. However, in addition there are other problems, computational in nature. A nonnegative-definite matrix can have zero or negative determinant. In many Bayesian application we would like to use precision matrix instead of the covariance matrix. To compute precision matrix, we simply invert the covariance matrix, but this means that we divide by the determinant, so a determinant which is zero creates a problem if you are not Chuck Norris, who can supposedly divide by zero as the rumor goes.

The main building block in the literature is the GARCH process. Say we have a random variable , we can model its volatility using:

(1)

Easy enough to understand. What is important for the volatility today is the volatility yesterday , with specific emphasis on the shock yesterday,

. Remember that if

then

is simply an estimate of the variance of

only, without accounting for anything dating back past t-1.

Up the dimension

Now, add another random variable . You now have 2 volatilities and a covariance term. But why not extend this process in the same way Vector Auto Regression (VAR) extends Auto regression? Enter VEC model:

(2)

where is a vectorize operator, taking a matrix and stacking it as a vector. Naturally, because of the symmetry of the matrix, we don’t need all the coefficients, so for clarity here is a better representation:

(3)

The intuition behind this model is the same as that underlying the VAR. Maybe when volatility of equities is high, volatility in bonds is lower, maybe when volatility in bonds is high, covariance with equities is high, etc.

A potential problem with this model, also analogues to the VAR, is that maybe volatilities are independent processes, meaning that only the diagonal of A and B matters, in which case we simply trouble the model with unnecessary estimation noise. Another computational issue mentioned before, is that since we do not model the matrix-process per se, but the three terms one by one, we don’t ensure the result is a valid covariance matrix, in particular no nonnegative-definite constraint is imposed. The BEKK model (Baba, Engle, Kraft and Kroner, 1990) makes this advancement, the curious reader is referred to the original paper. There is a good reason not to discuss those “first-generation” models in length. They are very difficult to estimate for more than a handful of variables. I have not tried those personally, it is a hearsay from those unfortunate souls who did. For those models, even if one is successful in estimation, the complications in estimation casts a heavy shadow on the results as far as practitioners are concerned.

CCC and DCC

A surge in the literature followed the next sizable step delivered by Engle (2002) in his seminal paper: “Dynamic Conditional Correlation: A Simple Class of Multivariate Generalized Autoregressive Conditional Heteroskedasticity Models”. From the abstract: “These (models) have the flexibility of univariate GARCH models coupled with parsimonious parametric models for the correlations.” Key entry point to this class of conditional correlation models is to realize that

(4)

with being a matrix with the volatility of the individual series (now estimated individually) on the diagonal and zeros off-diagonal. This is just a manipulation in matrix form of the usual equation we started with:

, since

. Now few things are in place:

Now we estimate.

Estimation using R

Let’s get some data. We pull the last few years of three ETF’s. The SPY (tracking the S&P 500), TLT and IEF (tracking long and medium term US bonds respectively).

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

library(quantmod) k <- 3 # how many years back? end<- format(Sys.Date(),"%Y-%m-%d") end_training <- "2015-01-01" start<-format(Sys.Date() - (k*365),"%Y-%m-%d") sym = c('SPY', 'TLT', "IEF") # S&P 500, long and medium term bonds, all ETFs l <- length(sym) dat0 = getSymbols(sym[1], src="yahoo", from=start, to=end, auto.assign = F, warnings = FALSE,symbol.lookup = F) n = NROW(dat0) dat = array(dim = c(n,NCOL(dat0),l)) ; ret = matrix(nrow = n, ncol = l) for (i in 1:l){ dat0 = getSymbols(sym[i], src="yahoo", from=start, to=end, auto.assign = F,warnings = FALSE,symbol.lookup = F) dat[1:n,,i] = dat0 ret[2:n,i] = (dat[2:n,4,i]/dat[1:(n-1),4,i] - 1) # returns, close to close, percent } ret <- na.omit(ret)# Remove the first observation time <- index(dat0)[-1] TT <- NROW(ret) colnames(ret) <- sym |

Now to demonstrate how to construct covariance matrix using the CCC and DCC models. We first get the univariate volatilities. We need them, they sit on the diagonal of the diagonal matrix . We estimate them using asymmetric GARCH with heavy tails.

|

1 2 3 4 5 6 7 8 9 |

library(rugarch) # Alexios Ghalanos (2014). rugarch: Univariate GARCH models. R package version 1.3-4. gjrtspec <- ugarchspec(mean.model=list(armaOrder=c(1,0)),variance.model =list(model = "gjrGARCH"),distribution="std") # std is student t distribution Uvolatility_fit <- matrix(nrow=(TT),ncol=l) # container of a matrix to hold the volatilities of the three assets for (i in 1:l){ Tgjrmodel = ugarchfit(gjrtspec,ret[,i]) Uvolatility_fit[,i] = as.numeric(sigma(Tgjrmodel)) } |

Now, once we have the meat in the sandwich , we are able to create the CCC and DCC-based covariance matrices. For the CCC (constant conditional correlation), we use the sample correlation matrix, and for the DCC (dynamic..), we use correlation matrix which is estimated based on a moving window of say 3 months.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

# create a the covariance of the CCC model nassets <- l # for better readability, the l looks too much like 1. # make containers for the matrices over time: samp_cor_tv <- cov_dcc <- cov_ccc <- array(dim=c(nassets, nassets, TT)) # Compute the sample unconditional correlation matrix: samp_cor <- cor(ret) # will remain constant throughout the loop wind <- 60 # roughly three months for (i in (wind+1):TT){ dt <- (Uvolatility_fit[i,])*diag(nassets) cov_ccc[,,i] <- dt %*% samp_cor %*% dt samp_cor_tv[,,i] <- cor(ret[(i-wind):i,]) cov_dcc[,,i] <- dt %*% samp_cor_tv[,,i] %*% dt } |

Results

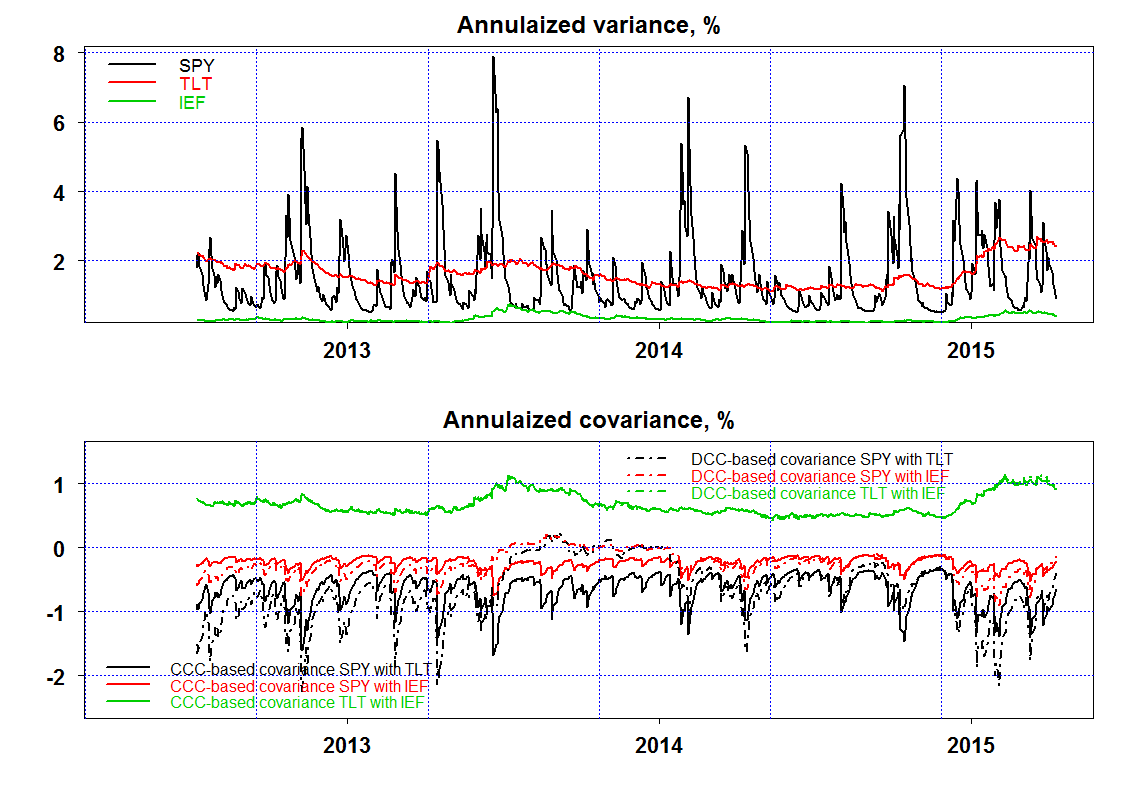

results are annualized and multiplied by 100 to move to percentage points for better readability. Plot it:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

lwd1 <- 2 ann_percent <- 252*100 par()$mar # Margins are too wide for my taste par(mfrow = c(2,1),mar=c(3.8,4.4,2.4,2.4)) plot(ann_percent*cov_ccc[1,1,]~time,ty="l",main="Annulaized variance, %",ylab="",lwd=lwd1,bty="o", cex.main=1.5,xlab="") lines(ann_percent*cov_ccc[2,2,]~time, lwd=lwd1, col=2) lines(ann_percent*cov_ccc[3,3,]~time, lwd=lwd1, col=3) grid(col="blue") legend("topleft", sym,col=1:3,lwd=lwd1,cex=1.1,bty="n",text.col=1:3) plot(ann_percent*cov_ccc[1,2,]~time,ty="l",main="Annulaized covariance, %",ylab="",lwd=lwd1,bty="o", cex.main=1.5,xlab="",ylim=c(-2.5,1)) lines(ann_percent*cov_ccc[1,3,]~time, lwd=lwd1,col=2) lines(ann_percent*cov_dcc[1,2,]~time, lwd=lwd1, col=1,lty=4) lines(ann_percent*cov_dcc[1,3,]~time, lwd=lwd1, col=2,lty=4) leg <- c(paste("CCC-based covariance", sym[1], "with", sym[2]) , paste("CCC-based covariance", sym[1], "with", sym[3])) legend("topleft",leg,col=1:2,lwd=lwd1,cex=1,bty="n",text.col=1:2) leg <- c(paste("DCC-based covariance", sym[1], "with", sym[2]) , paste("DCC-based covariance", sym[1], "with", sym[3])) legend("topright",leg,col=1:2,lwd=lwd1,cex=1,bty="n",text.col=1:2,lty=4) grid(col="blue") |

In the upper panel we have the diagonal of the covariance matrix. We see (1) the medium term bonds have the lowest volatility as expected, (2) SPY is volatile, and the variance of variance is also high (spiky-looking variance series). (3) the variance of the long end of the curve is higher than the variance of the medium term, which is a stylized fact in the yield curve literature. (4) Interestingly, the volatility of the long term bonds in US has been on the rise, probably a heightened sense of alert towards an impending(?) Fed hike in policy rates.

In the lower panel we have the covariance terms, all three of them, once estimated assuming CCC (solid) and once estimate assuming DCC (broken). For the covariance between the medium and long term bonds it does not matter much if you assume constant or dynamic correlation matrix. It does matter for the covariance terms of the SPY with the bonds however. For example, a DCC-based covariance matrix argue for almost zero covariance between stocks and bonds during mid 2013, while CCC-based suggest a more negative covariance during that period. Which is it, constant or dynamic, potentially matters a great deal for cross asset portfolio construction.

Super interesting post. Where, in the R Code, is the “TT” variable defined? (that would be the number of rows for the Uvolatility_fit object).

Best,

Rob

Thanks. Updated the code.

What you have implemented is not a “genuine” DCC but, opposite, an approximation of the DCC model by Engle (2002). In fact, you are not estimating dynamic conditional correlations by maximum likelihood, whether you calibrate them with a rolling estimator.

One of the elements leading to the diffusion of DCC is the possiblity of adopting a multi-stage estimation approach: first the variances on the marginals by univariate GARCH; then, the correlation dynamic which is driven by only two parameters in the scalar DCC representation.

Then, you should anticipate my question: why didn’t you implement the estimation of the DCC model?

If you tackle this issue, I would also suggest to have a look at Aielli (2013), JBES.

Thanks for the reference. From there (page 287) : “… the joint quasi-maximum-likelihood (QML) estimation of the DCC model is infeasible for large N. As a feasible estimator, Engle (2002) suggested a three-step procedure called DCC estimator.” As you write: first the variances on the marginals by univariate GARCH. In the code, this is “Uvolatility_fit” (U for univariate, second code snippet) and only then accounting for dynamic correlation.