This post is about the concept of entropy in the context of information theory. Where does the term entropy come from? What does it actually mean? And how does it clash with the notion of robustness?

Tag: Statistics

What is a Latent Variable?

This post provides an intuitive explanation for the term Latent Variable.

Test of Equality Between Two Densities

Are returns this year actually different than what can be expected from a typical year? Is the variance actually different than what can be expected from a typical year? Those are fairly light, easy to answer questions. We can use tests for equality of means or equality of variances.
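As a rough sketch of such a test, here is a permutation test written with the Python standard library only. This is a swapped-in technique chosen for brevity, not necessarily the test the post has in mind; the function name `permutation_pvalue` and the simulated returns are hypothetical. Comparing variances this way assumes the two samples share the same location.

```python
import random
import statistics


def permutation_pvalue(x, y, stat, n_perm=2000, seed=0):
    """Two-sided permutation p-value for the difference stat(x) - stat(y).

    Under the null that both samples come from the same distribution,
    the group labels are exchangeable, so we reshuffle them and count
    how often the reshuffled difference is at least as large as the
    observed one.
    """
    rng = random.Random(seed)
    observed = abs(stat(x) - stat(y))
    pooled = list(x) + list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(stat(pooled[:len(x)]) - stat(pooled[len(x):]))
        if diff >= observed:
            hits += 1
    # Add-one correction keeps the p-value strictly positive.
    return (hits + 1) / (n_perm + 1)


# Simulated daily returns, made up purely for illustration.
rng = random.Random(42)
typical_year = [rng.gauss(0.0005, 0.01) for _ in range(250)]
this_year = [rng.gauss(0.0005, 0.02) for _ in range(250)]

print("p-value, equal means:    ", permutation_pvalue(this_year, typical_year, statistics.mean))
print("p-value, equal variances:", permutation_pvalue(this_year, typical_year, statistics.variance))
```

A small p-value suggests the chosen statistic (mean or variance) differs between the two years; it says nothing about other features of the distribution.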

But how about the following question:

Is the profile/behavior of returns this year different than what can be expected in a typical year?

This is a more general and important question, since it encompasses all moments and tail behavior. And it is not as trivial to answer.

In this post I am scratching an itch I have had since I wrote Understanding Kullback – Leibler Divergence. In the Kullback – Leibler Divergence post we saw how to quantify the difference between densities, exemplified using the SPY return density per year. Once I was done with that post I was thinking there must be a way to test the difference formally, rather than just quantify, visualize and eyeball it. And indeed there is. The aim of this post is to show how to formally test for equality between densities.

Orthogonality in Statistics

Orthogonality in mathematics

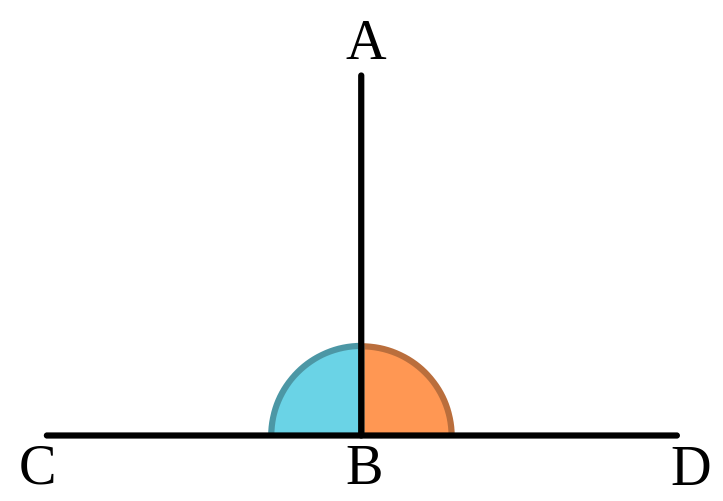

The word Orthogonality originates from a combination of two words in ancient Greek: orthos (upright), and gonia (angle). It has a geometrical meaning. It means two lines create a 90 degrees angle between them. So one line is perpendicular to the other line. Like so:

Even though Orthogonality is a geometrical term, it appears very often in statistics. You probably know that in a statistical context orthogonality means uncorrelated, or linearly independent. But why?

Why use a geometrical term to describe a statistical relation between random variables? By extension, why does the word angle appear in the incredibly common regression method least-angle regression (LARS)? Enough losing sleep over it (as you undoubtedly do), an extensive answer below.
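One way to see the link, as a minimal sketch: once two data vectors are centered (mean subtracted), the cosine of the angle between them equals their Pearson correlation, so a 90-degree angle corresponds to zero correlation. The helper names `center`, `cosine` and `pearson` and the numbers below are illustrative assumptions, not taken from the post.

```python
import math


def center(v):
    """Subtract the mean so the vector sums to zero."""
    m = sum(v) / len(v)
    return [a - m for a in v]


def cosine(u, v):
    """Cosine of the angle between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def pearson(x, y):
    """Pearson correlation from the sample covariance and standard deviations."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    sx = math.sqrt(sum((a - mx) ** 2 for a in x) / (n - 1))
    sy = math.sqrt(sum((b - my) ** 2 for b in y) / (n - 1))
    return cov / (sx * sy)


# Made-up data: the two quantities agree up to floating-point error.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 1.0, 4.0, 3.0, 5.0]
print(cosine(center(x), center(y)), pearson(x, y))

# Two centered vectors with zero dot product: perpendicular, hence uncorrelated.
u = [-1.0, 0.0, 1.0]
v = [1.0, -2.0, 1.0]
print(math.degrees(math.acos(cosine(u, v))))
```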

Understanding Kullback – Leibler Divergence

It is easy to measure distance between two points. But what about measuring distance between two distributions? Good question. Long answer. Welcome the Kullback – Leibler Divergence measure.

The motivation for thinking about the Kullback – Leibler Divergence measure is that you can pick up questions such as: “how different was the behavior of the stock market this year compared with the average behavior?”. This is a rather different question than the trivial “how was the return this year compared to the average return?”.
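To make the measure concrete, here is a minimal sketch for two discrete (binned) distributions, using the standard definition D(p || q) = sum of p_i * log(p_i / q_i). The function name `kl_divergence` and the bin frequencies are hypothetical and made up for illustration; they are not actual market data.

```python
import math


def kl_divergence(p, q):
    """KL divergence D(p || q), in nats, for two discrete distributions
    given as lists of probabilities over the same bins."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same number of bins")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # a bin with p_i = 0 contributes nothing
        if qi == 0:
            return math.inf  # p puts mass where q has none
        total += pi * math.log(pi / qi)
    return total


# Hypothetical binned return frequencies (each list sums to 1).
typical_year = [0.05, 0.25, 0.40, 0.25, 0.05]
this_year = [0.20, 0.20, 0.20, 0.20, 0.20]

print(kl_divergence(this_year, typical_year))
print(kl_divergence(typical_year, this_year))
```

The two printed numbers differ: the measure is not symmetric, which is why it is called a divergence rather than a distance.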

LASSO, LASSO, LASSO

LASSO stands for Least Absolute Shrinkage and Selection Operator. It was first introduced 21 years ago by Robert Tibshirani (Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B). In 2004 the four statistical masters, Efron, Hastie, Johnstone and Tibshirani, joined together to write the paper Least angle regression, published in the Annals of Statistics. It is that paper that sent the LASSO to the podium. The reason? They removed a computational barrier. Armed with a new ingenious geometric interpretation, they presented an algorithm for solving the LASSO problem. The algorithm is as simple as solving an OLS problem, and with computer code to accompany their paper, the LASSO was set for its liftoff*.

The LASSO overall reduces model complexity. It does this by completely excluding some variables, using only a subset of the original potential explanatory variables. Since this can add to the story of the model, the reduction in complexity is a desired property. Clarity of the authors’ exposition and well rehashed computer code are further reasons for the fully justified, full-fledged LASSO flareup.

This is not a LASSO tutorial. Google-search results, undoubtedly refined over years of increased popularity, are clear enough by now. Also, if you are still reading this I imagine you already know what the LASSO is and how it works. To continue from this point, what follows is a selective list of milestones from the academic literature: some theoretical and practical extensions.

Density Estimation Using Regression

Density estimation using regression? Yes we can!

I like regression. It is one of those simple yet powerful statistical methods. You always know exactly what you are doing. This post is about density estimation, and how to get an estimate of the density using (Poisson) regression.

Statistical Shrinkage

Shrinkage in statistics has increased in popularity over the decades. Now statistical shrinkage is commonplace, explicitly or implicitly.

But when is it that we need to make use of shrinkage? At least partly it depends on the signal-to-noise ratio.

Top countries in poker (Test equality of proportions using bootstrap)

Every once in a while I play poker online. The poker site allows you to ask for tournament history. You get an email which contains hundreds of summaries (I open several tables at once so have quite some history). A typical summary looks as follows:

Understanding False Discovery Rate

False Discovery Rate is an unintuitive name for a very intuitive statistical concept. The math involved is as elegant as possible. Still, it is not an easy concept to actually understand. Hence I thought it would be a good idea to write this short tutorial.

We reviewed this important topic in the past, here as one of three Present-day great statistical discoveries, here in the context of backtesting trading strategies, and here in the context of scientific publishing. This post targets the casual reader, explaining the concept of False Discovery Rate in plain words.

Outliers and Loss Functions

A few words about outliers

In statistics, outliers are as thorny a topic as it gets. Is it legitimate to treat the observations seen during the global financial crisis as outliers? Or are those simply a feature of the system, and as such an integral part of a very fat-tailed distribution?

Trim your mean

The mean is arguably the most commonly used measure of central tendency. No, no, don’t fall asleep! Important point ahead.

We routinely compute the average as an estimate for the mean. All else constant, how much return should we expect the S&P 500 to deliver over some period? The average of past returns is a good answer. The average is the Maximum Likelihood (ML) estimate under Gaussianity. The average is a special case of least squares minimization (a regression with no explanatory variables). It is a good answer. BUT:

Optimism of the Training Error Rate

We all use models. We all work continuously to improve and validate our models. Constant effort is made trying to estimate: how good is our model, actually?

A general term for this estimate is error rate. A low error rate is better than a high error rate; it means our model is more accurate.

On Central Moments

Sometimes I read academic literature, and oftentimes those papers contain some proofs. I usually gloss over some innocent-looking assumptions on moments’ existence, invariably popping up before derivations of theorems or lemmas. Here is one among countless examples, actually taken from Making and Evaluating Point Forecasts:

Why statistical bootstrap

I often write about bootstrap (here an example and here a critique). I refer to it here as one of the most consequential advances in modern statistics. When I wrote that last post I was searching the web for a simple explanation to quickly show how useful bootstrap is, without boring the reader with the underlying math. Since I was not content with anything I could find, I decided to write it up, so here we go.