Preview

Constructing a portfolio means allocating your money among a few chosen assets. The simplest thing you can do is split your money evenly among them. Simple as it is, good research shows that it does just fine, and sometimes even better than more sophisticated methods (for example, Optimal Versus Naive Diversification: How Inefficient is the 1/N). However, there is also good research that argues the opposite (for example, Large Dynamic Covariance Matrices), so go figure.
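To make the even split concrete, here is a minimal sketch of the 1/N rule. The helper name `equal_weights`, the budget, and the number of assets are made up for illustration; they are not taken from the papers above.

```python
import numpy as np

def equal_weights(n_assets: int) -> np.ndarray:
    """Return the 1/N weight vector: every asset gets the same share."""
    return np.full(n_assets, 1.0 / n_assets)

# Hypothetical example: 10,000 units of currency split across 4 assets
budget = 10_000.0
w = equal_weights(4)
allocation = budget * w  # money placed in each asset
print(w, allocation)
```

Note that nothing here is estimated from data, which is exactly why the rule is so hard to beat: there is no estimation error to hurt you.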

Anyway, this post shows a few of the most common ways to build a portfolio. We will discuss portfolios that are optimized for:

- Equal Risk Contribution

- Global Minimum Variance

- Minimum Tail-Dependence

- Most Diversified

- Equal weights

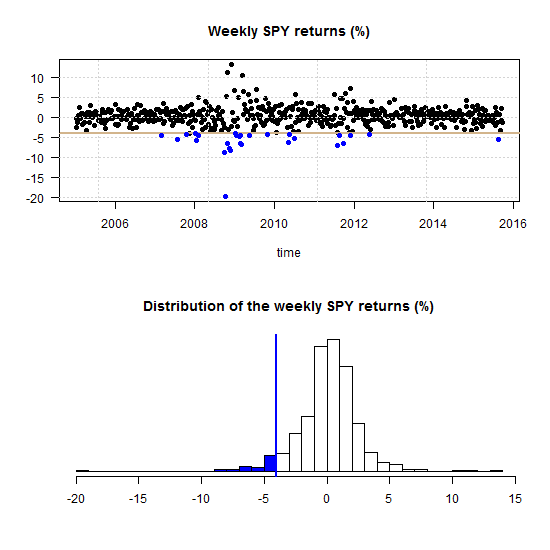

We will optimize based on the first half of the sample and look at out-of-sample results in the second half. Simply put, we will see how those portfolios have performed.
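The split-sample idea can be sketched roughly as follows. This is only an illustration under loud assumptions: the returns are simulated (not real data), only two of the portfolios are shown, and the global minimum variance weights use the unconstrained closed-form solution (short positions allowed), which may differ from the optimizer used for the actual results in this post.

```python
import numpy as np

rng = np.random.default_rng(0)

# Simulated daily returns for 4 hypothetical assets (assumption: not real data)
n_obs, n_assets = 1000, 4
vols = np.array([0.01, 0.015, 0.02, 0.025])
returns = rng.normal(0.0, vols, size=(n_obs, n_assets))

# First half is used to estimate weights, second half to evaluate them
half = n_obs // 2
in_sample, out_sample = returns[:half], returns[half:]

def gmv_weights(r: np.ndarray) -> np.ndarray:
    """Unconstrained global minimum variance weights: proportional to inv(cov) @ 1."""
    cov = np.cov(r, rowvar=False)
    raw = np.linalg.solve(cov, np.ones(cov.shape[0]))
    return raw / raw.sum()  # normalize so the weights sum to one

weights = {
    "equal": np.full(n_assets, 1.0 / n_assets),
    "gmv": gmv_weights(in_sample),
}

# Out-of-sample volatility of each portfolio, using weights fixed in-sample
oos_vol = {name: (out_sample @ w).std(ddof=1) for name, w in weights.items()}
for name, vol in oos_vol.items():
    print(f"{name}: out-of-sample daily volatility = {vol:.5f}")
```

The key design point is that the weights never see the second half of the data, so whatever the evaluation shows is not flattered by in-sample fitting.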